Welcome Note

Rajan Sethuraman, CEO, LatentView Analytics

Rajan kicked off LatentView Analytics’ 2023 Analytics Roundtable in San Francisco, United States; this was the 14th edition of our Roundtable series. The theme of this year’s event was: Breaking Silos, Amplifying Results through Data.

Since the last Roundtable in July 2022, and after going public, LatentView has experienced significant momentum and has built on its industry-vertical expertise and offerings. This includes a sharp focus on data engineering, one of the company’s core competencies and value propositions.

Rajan touched upon the overarching theme of this year’s Roundtable: Democratizing Data. He stated that the key purpose of the Roundtable was to reinforce how vital it is for enterprises to break down data silos across every vertical and horizontal function, from supply chains to customer service to marketing and advertising.

As part of its ongoing efforts to address the most pressing needs of today’s digital-first companies, LatentView teased plans for a major growth marketing focus and solutions offering. Rajan stated that LatentView has undertaken a vast amount of marketing analytics work over the past 15 years, and that the company is now focusing heavily on empowering its customers to supercharge their growth-marketing initiatives through data analytics.

Keynote Address: Measurement in the Age of Stronger Privacy Policies

Speaker: Harikesh Nair – Director, Data Science, Ads Measurement, Google

“There’s a groundswell of change happening in marketing and advertising, and the industry is rapidly shifting from being just data driven to much more privacy focused due to consumer demand and emerging regulations.” Harikesh Nair set the tone of his keynote with this observation. He defined this emerging paradigm as “Privacy Preserving Advertising,” which requires change from all players in the ecosystem, including advertisers, publishers and adtech companies.

The key challenges facing the industry tied to privacy are related to attribution, reach, trust, and measurement. Measurement powers a lot of the advertising world. Harikesh considered it especially important because every player depends on it: advertisers need measurement to know where to invest, publishers need it to showcase the value of their inventory to advertisers, and adtech companies need it to optimize spend and demonstrate value to clients.

For the longest time, the entire advertising ecosystem relied on third-party cookies, but there are major changes underway with the end of cookies coming in 2024. The rise of privacy expectations and regulations is changing the way consumers are tracked online, and this is affecting (and will continue to affect) the quality and scope of available consumer data.

The good news, as Harikesh elucidated, is that companies are working on solutions, but the downside is that not all of them are viable long-term or align with evolving regulations. Google (and others) are shifting focus to “Privacy Preserving Technologies” that don’t rely on cookies. Based on this shift, he shared that the future of advertising will rest upon three core pillars:

- Good first-party (and zero-party) data will be critical. This data must be based on a value exchange with consumers.

- Automation will be increasingly important to target and measure data coming from new sources.

- Privacy Preserving Technologies (e.g., Google’s Privacy Sandbox and others) will be important, with new APIs made available to all downstream players.

Internet browsers and operating systems will increasingly act as the agent for the consumer and will send information downstream. Advertisers will not be able to track the same user across the Internet, which completely changes the attribution game. This will require advertisers to depend more on event-level data and aggregate data (location-based, behavioral, and contextual signals). Harikesh summarized that going forward, privacy-preserving technology and methodologies will become more prevalent and will subsequently give birth to more advanced AI/ML to reduce data noise and bring all this siloed data together.

Panel discussion 1: Connected Data – The Foundation of a Resilient Supply Chain

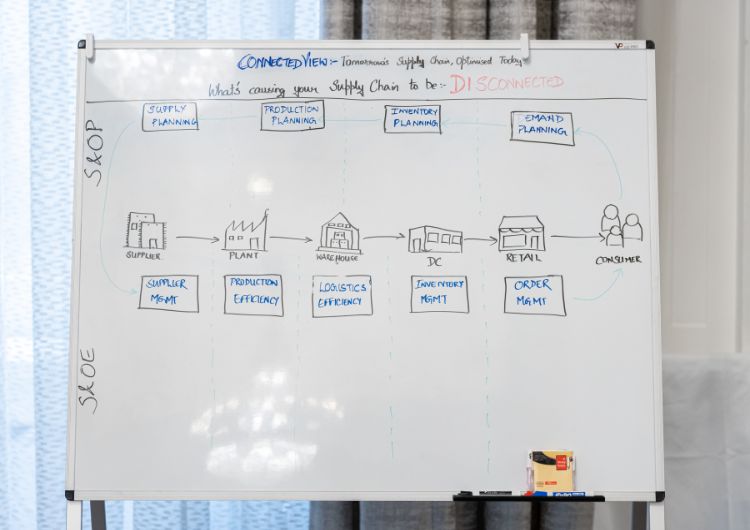

For years, supply chain professionals have been responsible for mitigating minor risks and balancing supply and demand across the value chain. Recent pressures on our global supply chain have made it difficult to find balance. Part of the problem is a lack of understanding about what supply chain resilience really means and how to define it.

In the face of rapid economic and technological change, the definition and parameters around supply chain resilience have evolved. The panel discussion on Connected Data – The Foundation of a Resilient Supply Chain started with the participants deliberating on what supply chain resilience means, how to measure it, and how to build a future-ready supply chain network. Each one shared a supply chain disruption they had observed, experienced, or learned about in the past 12–18 months that made them think: “Why did we not see this coming?”

According to Pavan Heda from Johnson & Johnson, it’s one thing to have a solid business continuity plan when a crisis hits. During the pandemic, J&J was able to move quickly and shift operations at one plant from manufacturing mouthwash to manufacturing hand sanitizer.

However, as the pandemic proved (it was a curveball no business was truly prepared to react to), businesses need to think about supply chain resilience from a proactive POV. If you only take a reactive approach, you will always be on your heels – especially as risk can be anything and it can come from anywhere at any time. No company knows what risks will converge at the same time.

It’s therefore increasingly important for supply chain leaders to lean into data to measure vulnerability. This includes looking at everything across your entire supply chain (suppliers, plants, distribution centers, etc.) to determine which areas across the value chain present vulnerabilities. The reality, however, is that a true strategy for proactive resilience doesn’t happen overnight. It requires alignment among leadership to first identify your supply chain vulnerabilities (via democratizing data across the organization) and then rank those risks so you can prioritize action.
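As a rough illustration of the "identify, then rank" step described above, here is a minimal Python sketch. It is not something the panel presented: the `Node` type, the example sites, and the scoring rule (likelihood multiplied by impact) are all assumptions made for illustration, and a real program would draw these inputs from shared, cross-functional data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """One point in the supply chain (hypothetical example type)."""
    name: str
    kind: str          # e.g. "supplier", "plant", "distribution center"
    likelihood: float  # assumed probability of a disruption, 0.0 to 1.0
    impact: float      # assumed cost of a disruption, in arbitrary units


def risk_score(node: Node) -> float:
    """Simple expected-loss style score: likelihood times impact."""
    return node.likelihood * node.impact


def rank_by_risk(nodes: list[Node]) -> list[Node]:
    """Return nodes ordered from highest to lowest risk score."""
    return sorted(nodes, key=risk_score, reverse=True)


# Illustrative, made-up inputs; not data from the Roundtable.
nodes = [
    Node("Supplier A", "supplier", likelihood=0.30, impact=50.0),
    Node("Plant 1", "plant", likelihood=0.10, impact=200.0),
    Node("DC West", "distribution center", likelihood=0.05, impact=80.0),
]

ranked = rank_by_risk(nodes)
for node in ranked:
    print(f"{node.name} ({node.kind}): {risk_score(node):.1f}")
```

The point of the sketch is only that ranking becomes simple once vulnerability data from suppliers, plants, and distribution centers sits in one place; the scoring rule itself is a placeholder that leadership would need to agree on.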

Another result of the pandemic for many companies was a sudden and significant drop in order volume. This, of course, depends on your line of business: companies manufacturing face masks and sanitizers scrambled to meet demand. According to Raj Menon, a former executive at Sun Hydraulics, the company wasn’t prepared for the drop in demand that happened at the pandemic’s onset. Likewise, when demand went back up, it was also hard to adjust.

In the B2B world, trust is the cornerstone of all supplier-buyer relationships. Suppliers trust that their buyers can accurately forecast demand (which is reliant on data democratization), and buyers trust that their suppliers can deliver the needed goods. This mutually beneficial relationship hinges upon information and data. A disruption to this symbiosis will cause negative ripples across the entire supply chain.

A lot of enterprises today are using ERPs to ensure their financials are accurate and that products are delivered on time. The downside is that many of these systems don’t provide a comprehensive line-of-sight into all the available supply chain data, and unfortunately, a lot of that existing data goes unused.

The consensus was that the problem facing most enterprises is not a lack of data. Companies today have more data than they know what to do with. They should be evaluating real-time data streaming architectures, which are becoming more sophisticated with platforms such as Snowflake and Apache Spark. These platforms enable companies to optimize their DataOps: not just to store more data in the cloud, but to move data from insight to action. These data cloud solutions are helping companies move from collecting to connecting data by breaking down the data silos that are the root cause of disconnected supply chains.

Panel discussion 2: Democratizing Data – Importance of Cross-Functional Collaboration

Ram Balasubramanian – Co-founder, Mantrah

Henry Bzeih – Global Chief Strategy Officer Mobility, Automotive & Transportation Industry, Microsoft

Kavita Vazirani – Head of Research, Insights and Analytics, The Walt Disney Company

Moderator: Vivek Singh – Head of Growth, Technology, LatentView Analytics

The high-powered panel on Democratizing Data – Importance of Cross-Functional Collaboration discussed the importance of data democratization and touched upon how organizational silos and a legacy culture of data “ownership” prevent true progress.

Many companies are starting to understand the importance of data democratization, but in many cases those silos and that ownership culture still stand in the way. Southwest’s scheduling technology, SkySolver, which grounded 8,000+ flights this past holiday season, is an example of what can happen when companies rely on outdated, disparate data sources and architectures. The cause of much of this? Lack of a data culture.

More forward-thinking leaders are taking a proactive approach to break down data barriers between departments and data sources. There is a significant opportunity for analytics leaders to promote and spearhead data democratization efforts and to bake data into their company’s DNA. This is about changing behaviors and beliefs within an organization so that everyone is encouraged to use data to improve decision-making in a way that benefits both data practitioners and non-data practitioners.

A major challenge for Kavita Vazirani from The Walt Disney Company was geographic data silos that existed at Comcast NBC with different P&Ls and marketing mix models. With 25 agencies buying media for Comcast at different rates, it became critical for the regions to work together to manage their media buying with some level of transparency around spend and ROI. This is where the idea of centralizing strategy and decentralizing information comes into play, and analytics leaders today must embrace relentless collaboration to succeed.

A byproduct of technological evolution is that data has become more siloed. Thirty years ago, when all of a company’s data resided in one place (on a mainframe), data silos were not the issue. Ram Balasubramanian from Mantrah worked for the analytics department of an airline and realized that in those days, it was knowledge that was siloed. Everyone had access to all the data, but there was a lack of understanding about how to use it in a cohesive and unified way to drive larger business objectives. He realized in that situation that moving people around the organization was the best way to break these knowledge silos. Today, things have become more complex. Data is physically siloed across CDPs, DMPs and other platforms, and institutional knowledge remains siloed too. This in many ways is a culture problem where IT and business leaders are not interacting with each other.

At legacy companies like Southwest Airlines, the problem stems from teams not seeing an urgent issue and therefore not seeing an immediate reason to change. Sometimes enterprises use data privacy concerns as an excuse to close data off internally to business users. However, what’s needed is more collaboration. New low-code and no-code platforms are helping to bridge the gap and are allowing data to flow more freely across apps and decision making for CRM, internal workflows and approvals that used to be bottlenecked with IT.

Things like data visualization tools must be built with the input and knowledge from businesspeople to truly identify the desired business goals. For any company investing in data tools, the data and business teams need to work together transparently (and have access to decentralized data) to succeed.

Companies can also look to set up data Centers of Excellence (CoEs) that tap into cross-functional collaboration and data sharing to drive innovation. Like anything, cultural change takes time. A solid strategy is to tackle one thing at a time and view data democratization as a journey. Once you tackle one silo, it catches on with stakeholders and leadership, and the next one will fall much more easily as the value becomes more apparent.

Fireside Chat: Data for the Greater Good

Krishnan set up the fireside chat with the perspective that “Data for the Greater Good” is a broad topic and means a lot of things when it comes to analytics. It can refer to the greater good of an organization and its processes. It can be for the greater good of an organization’s people. And it can also be for the greater good of society – be it tackling a disease or environmental issues.

Yelak shared a brief overview of his background and career spanning IT and business leadership positions at some of the largest and best-known consumer brands, including PepsiCo and Walmart, as well as his current role with the International Myeloma Foundation (IMF), a non-profit organization dedicated to improving the quality of life of myeloma patients while working toward prevention and a cure. Yelak’s role with the IMF is uniquely personal, as he is a myeloma survivor. Throughout his career, he’s witnessed many examples where breaking down data silos was critical to success.

Data for the greater good of processes in organizations: At PepsiCo, Yelak helped work on an inventory management initiative to forecast demand. The company wanted to understand the entire timeline and supply chain, right from when a potato is extracted from the ground to when it lands on a shelf in a bag of chips. Breaking down data silos was key to understanding demand forecasting. With PepsiCo’s inventory automation and diagnostics tool, they not only broke down data silos but also used data to free up money that they could reinvest elsewhere, slashing their days of excess inventory from 28 to 7. This was a global initiative across all the PepsiCo businesses. Another example that illustrates the importance of data democratization involves the chip flavor decision process. PepsiCo ran a challenge where consumers could submit and vote on a new chip flavor. PepsiCo would produce and launch the winning flavor within a week of the contest ending. This required data sharing across R&D, marketing, merchandising, packaging, etc., to be able to launch on time.

Data for the greater good of people in organizations: At Walmart, Yelak moved from an IT role into a business role with HR. When he first started on the people analytics side, the company was generating thousands of reports based on employee attendance, scheduling, etc., that required answers from regional managers. Producing them took a long time because it required data from all kinds of disconnected sources across regions and the entire country/world, so by the time the reports were refreshed, it was all old information. They decided to do something outside of the box: they took the 20 most-asked questions for managers and built a “People Bot” to democratize data. Using conversational AI, managers could get answers to questions such as how many people called in sick without having to ask every regional manager individually.

Data for the greater good of society (benefit of cancer patients): Yelak moved from the business to the nonprofit world and his personal story is compelling (as a myeloma patient). His main goal with the IMF is to ensure that the patient population is not viewed as monolithic. When companies over-index on generalized personas and segments and don’t use more granular data to personalize communication and experiences, then all the data in the world will still fall flat. Regardless of the touchpoint, IMF aims to provide a holistic personalized experience for patients using data. When people are diagnosed with cancer, there is often a lack of hope. The mission is to reduce that “time to hope” and measure what is required (education, connecting them with other patients that look like them across various demographic factors and where they are in their journey) all to overcome obstacles and increase the patient’s life span.

***

The takeaways from LatentView’s Roundtable were clear: To compete and thrive in a highly competitive digital economy, companies must embrace a mindset and execution plan for data democratization. This requires exploring and tapping into new data sources in the face of emerging privacy regulations, taking a proactive stance toward business continuity and resilience using data, leveraging cloud-native solutions and platforms to unify and accelerate decision making, and pushing to build a data-centric culture from the top down and the ground up.