EvoLV Investor Day

Location: Mumbai

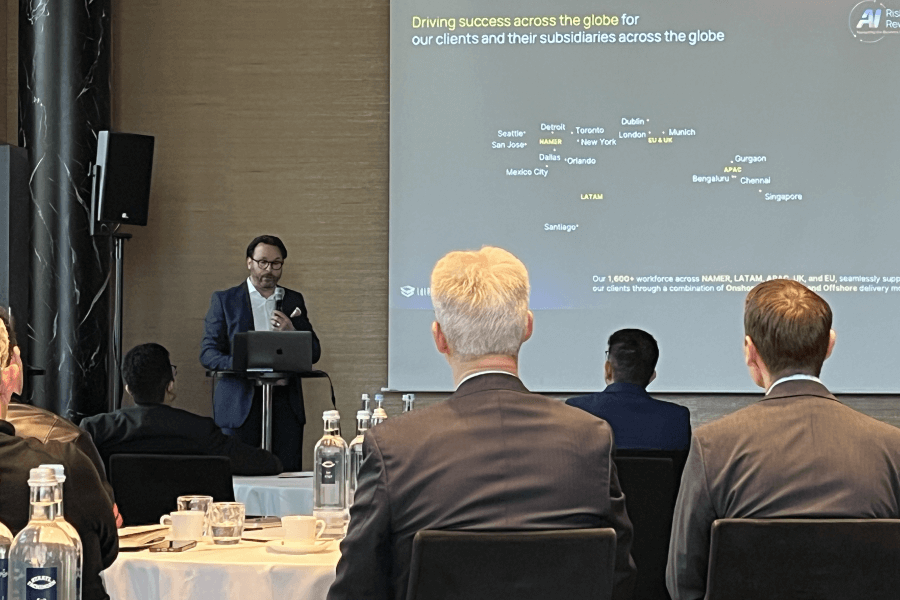

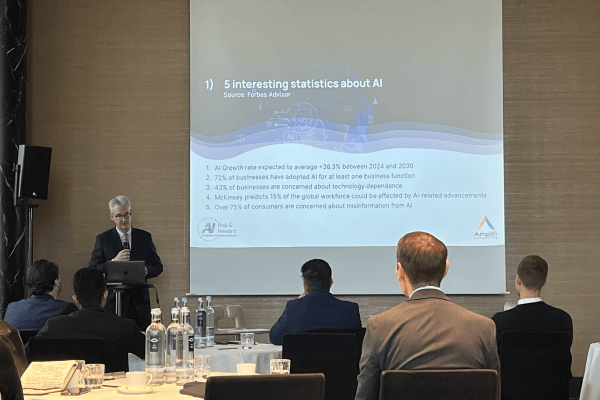

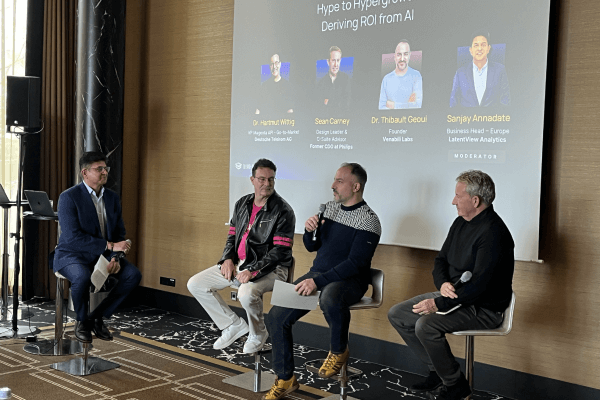

The three-year anniversary of our historic IPO marked another milestone in our journey: EvoLV, LatentView’s first Investor Day. The event brought together more than 150 of our valued institutional investors for an in-depth look at our vision, our solutions, and the market trends shaping the future. We highlighted how our strategic positioning and advanced analytics solutions drive innovation and value creation across industries.