History is full of predictions that put future technology on a high pedestal, and predictions about Artificial Intelligence (AI) are no different. Early AI researchers predicted a future in which robots would walk, talk, and display perfectly human qualities. There is no doubt that Machine Learning has produced some ground-breaking advances, but AI just isn’t there yet.

In 1957, the computer science pioneer and economist Herbert Simon said, “It is not my aim to surprise or shock you—but the simplest way I can summarize is to say that there are now in the world machines that can think, that can learn and that can create. Moreover, their ability to do these things is going to increase rapidly until – in a visible future – the range of problems they can handle will be coextensive with the range to which the human mind has been applied.” [1]

But a world in which machines think like humans is still far off. AI excels at many tasks, including beating the world’s best Go player three times, but can it be considered intelligent if it cannot do the things that a toddler can?

Moravec’s Paradox: Why are Simple Tasks Hard for AI?

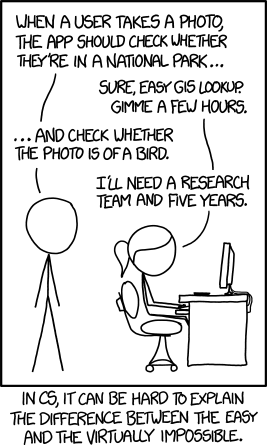

The problem is not new. It was laid out by the computer scientist Hans Moravec, after whom Moravec’s Paradox is named. He observed that logical reasoning requires relatively little computation, whereas sensorimotor skills require enormous computational resources.

In his 1988 book Mind Children [2], he wrote, “The deliberate process we call reasoning is, I believe, the thinnest veneer of human thought, effective only because it is supported by this much older and much more powerful, though usually unconscious, sensorimotor knowledge.”

Simply put, our brains are the products of millions of years of evolution and natural selection. The things that humans find hard are hard only because they are new in evolutionary terms. The skills we acquired through evolution come to us so naturally that we do not have to think about them. How exactly are we going to teach a machine the things that we do not even think about? As the philosopher Michael Polanyi famously said, “We can know more than we can tell.”

Being a human is much easier than building one. Take something as simple as playing catch with a friend. When you break this activity down into the discrete biological functions required to accomplish it, you realize it is not quite so simple. You need sensors, transmitters, and effectors. You need to judge the distance between your companion and yourself, and to account for sun glare, wind speed, and nearby distractions. You need to decide how firmly to grip the ball and when to squeeze the mitt during a catch. You also need to process several what-if scenarios: What if the ball goes over my head? What if it hits the neighbor’s window?

AI can do things that we consider complex, yet it still has limitations and cannot substitute for the creative part of the human brain. Take healthcare as an example. Right now, would you trust a robot or a smart algorithm with a life-altering decision? For that matter, would you even let it make a simple decision such as whether you should take painkillers?

The NHS ran a trial that used chatbots to ease the burden on its health lines. Patients taking part indicated that they would game the system to get a doctor’s appointment faster rather than follow a chatbot’s recommendations. That might change in the future, with patients trusting AI for simple problems, but it is hard to imagine healthcare without human empathy. We will always need doctors to hold our hands, talk us through life-changing decisions, and show their support. An algorithm can never replace that.

On a lighter note, books and movies like Moneyball show us how data analytics can help pick winning sports teams. But can an AI be a top-level sports coach?

This is where Moravec’s Paradox carries weight. It is not only about what AI can do, but also about the effort required to teach AI a given task and whether that effort is worthwhile.

How Moravec’s Paradox is Relevant Today

What AI cannot do right now is go beyond the parameters of what it has learned. Humans, on the other hand, can use their imagination to dream up new possibilities. AI cannot perform creative tasks such as writing a joke or telling an original story. Even the most mundane business decisions rely on creativity. A bank administrator finding a management strategy that better engages the workforce, or a hotel executive tweaking the furnishings to match millennial customers’ lifestyles, is not in robot territory.

Setting aside the fear that it will replace us, AI is not necessarily a bad thing. When we are free from menial work, the world slows down around us. We have more time to strategize, build relationships with clients, and optimize our processes to increase productivity and earn a greater return on investment. After all, it is not only about efficiency and effectiveness; it is about enabling innovation.

Moravec’s Paradox confirms one thing: the fact that we have built computers that can beat humans at Go or chess does not mean that artificial general intelligence is just around the corner. Yes, we are one step closer, but each further step will only get harder. Humans’ tacit knowledge is a valuable asset that cannot easily be transferred, and humans will always remain relevant.

References

[1] 1957: When Machines that Think, Learn, and Create Arrived

[2] Moravec, H. (1988). Mind children: The future of robot and human intelligence. Harvard University Press.