Many enterprises using Databricks for ETL workflows face challenges with isolated data management across workspaces. This can lead to inefficiencies, data silos, and security concerns.

Unity Catalog, from Databricks, aims to solve this with a centralized catalog and a single governance layer across workspaces. This blog post explores the key features and benefits of Unity Catalog to help you decide whether migrating from the traditional Hive Metastore is the right move for your team.

Unity Catalog: A Centralized Approach

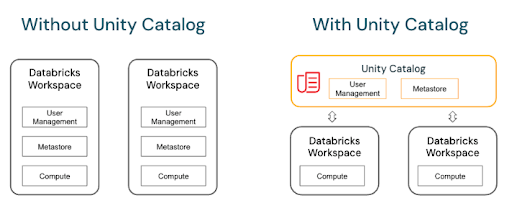

Databricks addresses the challenge of siloed workspaces with Unity Catalog, which establishes a central catalog that every workspace attached to the same metastore can access. Here’s how it works:

- Create a UC-enabled Metastore: This acts as the central repository for all your data objects.

- Attach Workspaces: Integrate your existing Databricks workspaces with the UC-enabled metastore.

- Unified Catalog and Object Management: Create and manage catalogs, schemas, tables, and other data objects within the central Unity Catalog. These objects become accessible across all attached workspaces with appropriate permissions.
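Once a workspace is attached to the metastore, the third step usually comes down to a handful of SQL statements. The sketch below only builds those statements as strings so it can run anywhere. The catalog, schema, and group names are hypothetical, and it assumes the metastore has a default storage location (otherwise `CREATE CATALOG` needs a `MANAGED LOCATION` clause).

```python
def q(identifier: str) -> str:
    """Backtick-quote a SQL identifier, escaping embedded backticks."""
    return "`" + identifier.replace("`", "``") + "`"


def bootstrap_statements(catalog: str, schema: str, group: str) -> list[str]:
    """Build SQL that creates a catalog and schema and grants read access."""
    cat, sch, grp = q(catalog), q(schema), q(group)
    return [
        f"CREATE CATALOG IF NOT EXISTS {cat}",
        f"CREATE SCHEMA IF NOT EXISTS {cat}.{sch}",
        # Reading a table requires USE CATALOG and USE SCHEMA on its parents.
        f"GRANT USE CATALOG ON CATALOG {cat} TO {grp}",
        f"GRANT USE SCHEMA ON SCHEMA {cat}.{sch} TO {grp}",
        # SELECT granted on the schema is inherited by the tables inside it.
        f"GRANT SELECT ON SCHEMA {cat}.{sch} TO {grp}",
    ]


if __name__ == "__main__":
    # Hypothetical names; in a Databricks notebook, pass each one to spark.sql().
    for stmt in bootstrap_statements("sales", "orders", "data-analysts"):
        print(stmt)
```

Because the objects live in the metastore rather than in a workspace, these grants apply in every attached workspace.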

Steps for a Quick POC

There are two main options for migrating objects to Unity Catalog:

- Upgrade from UI: For existing Hive Metastore external tables, use the built-in upgrade option at the schema or table level to migrate them to Unity Catalog.

- Sync Command: Alternatively, leverage SQL commands like SYNC SCHEMA or SYNC TABLE (with or without dry run) to migrate objects. These commands create corresponding objects within the Unity Catalog. The sync approach works for external tables. For managed tables, we need to clone the tables to the new managed location.

After successful execution, you’ll see a new object under Unity Catalog: external tables point to their original data location, while cloned managed tables live in the new managed location.
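For a POC, the sync and clone options can be sketched as below. The helper functions and the catalog, schema, and table names are hypothetical; the statements use the `SYNC ... DRY RUN` and `DEEP CLONE` syntax. The clone helper assumes the managed source is a Delta table; for other formats, a `CREATE TABLE ... AS SELECT` may be a better fit.

```python
HIVE = "hive_metastore"  # the legacy catalog name as seen from Unity Catalog


def sync_schema(target_catalog: str, schema: str, dry_run: bool = True) -> str:
    """Build a SYNC SCHEMA statement for the external tables in a schema."""
    stmt = f"SYNC SCHEMA {target_catalog}.{schema} FROM {HIVE}.{schema}"
    return stmt + " DRY RUN" if dry_run else stmt


def sync_table(target_catalog: str, schema: str, table: str,
               dry_run: bool = True) -> str:
    """Build a SYNC TABLE statement for a single external table."""
    name = f"{schema}.{table}"
    stmt = f"SYNC TABLE {target_catalog}.{name} FROM {HIVE}.{name}"
    return stmt + " DRY RUN" if dry_run else stmt


def clone_managed_table(target_catalog: str, schema: str, table: str) -> str:
    """Build a DEEP CLONE statement that copies a managed Delta table."""
    name = f"{schema}.{table}"
    return (f"CREATE TABLE IF NOT EXISTS {target_catalog}.{name} "
            f"DEEP CLONE {HIVE}.{name}")


if __name__ == "__main__":
    print(sync_schema("main", "sales"))                    # preview only
    print(sync_table("main", "sales", "orders", dry_run=False))
    print(clone_managed_table("main", "sales", "customers"))
```

Running with `DRY RUN` first reports what would be upgraded without creating anything, which makes it a low-risk first step.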

Features of Unity Catalog

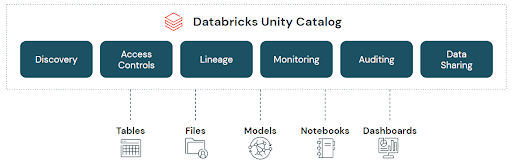

While Unity Catalog offers a centralized catalog similar to external solutions like AWS Glue Data Catalog and Azure Data Catalog, its functionality extends well beyond cataloging. It establishes a data governance framework with features that can be broadly categorized into four key areas:

- Data Discovery: Unity Catalog meticulously tracks and logs metadata for all activities within the platform. This comprehensive metadata allows users to leverage the search interface for efficient discovery of relevant data objects. Additionally, access controls are enforced for metadata, ensuring users only see information based on their permissions.

- Logging and Auditing: System tables provide valuable insights to answer critical data governance questions, such as:

  - Data ownership (Who owns a specific dataset?)

  - Data usage (How frequently is a table accessed? By whom?)

  - Resource allocation (Compute usage by users/groups)

  - Granular Access Control (Attribute-level access control is coming soon, eliminating the need for separate views to restrict access to specific data points)

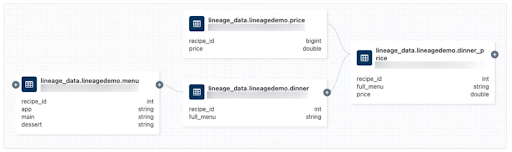

- Comprehensive Data Lineage: Unity Catalog goes beyond basic lineage tracking. It captures detailed metadata at the table and column level, identifying upstream and downstream data flows and transformations occurring on specific columns. It even tracks lineage from code written within the workspace (Spark, Python, etc.).

- Secure and Streamlined Data Sharing: Unity Catalog empowers business users to control data sharing. They can define how, why, and what data is shared, along with the designated recipients. This eliminates the insecure practices of data extraction to spreadsheets or sharing via email and chat applications. Unity Catalog leverages a platform-agnostic protocol called Delta Sharing to facilitate secure data sharing, fostering a culture of data governance within your organization.
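To make the sharing workflow more concrete, here is a minimal sketch of the SQL a data owner might run to publish tables through Delta Sharing. The share, table, and recipient names are hypothetical, and the helper only assembles the statements so that it runs outside Databricks.

```python
def share_statements(share: str, tables: list[str], recipient: str) -> list[str]:
    """Build SQL that creates a share, adds tables, and grants a recipient access."""
    stmts = [f"CREATE SHARE IF NOT EXISTS {share}"]
    # Each table is added explicitly, so the owner decides exactly what is shared.
    stmts += [f"ALTER SHARE {share} ADD TABLE {table}" for table in tables]
    stmts.append(f"CREATE RECIPIENT IF NOT EXISTS {recipient}")
    stmts.append(f"GRANT SELECT ON SHARE {share} TO RECIPIENT {recipient}")
    return stmts


if __name__ == "__main__":
    # Hypothetical names for illustration only.
    for stmt in share_statements(
        "quarterly_sales", ["main.sales.orders"], "partner_analytics"
    ):
        print(stmt)
```

The recipient only ever sees the tables added to the share, and revoking the grant cuts off access without touching the underlying data.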

Advanced Features and Migration Considerations

While Unity Catalog offers core functionalities like data discovery and governance, it also boasts some advanced capabilities:

- Customizable Dashboards Using the UC System Schemas: System schemas within Unity Catalog hold valuable data. However, these schemas are not enabled by default. Through API calls, you can activate them to leverage the data for building custom dashboards focused on usage, cost, and other relevant metrics.

- Integration with New Databricks Features: Newer Databricks features, such as predictive IO, deletion vectors, LakehouseIQ, and LLMs, rely on metadata from Unity Catalog. Migrating to Unity Catalog ensures compatibility with these features.
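As a rough illustration of the system-schema point above, the sketch below builds the REST request that enables a system schema and a sample query a usage dashboard could start from. The host and metastore ID are placeholders, the helper name is hypothetical, and the column names reflect the `system.billing.usage` table as we understand it, so check them against your workspace before relying on them.

```python
def enable_system_schema_request(host: str, metastore_id: str,
                                 schema: str) -> tuple[str, str]:
    """Return the HTTP method and URL that enables one system schema."""
    url = (
        f"{host.rstrip('/')}/api/2.0/unity-catalog/metastores/"
        f"{metastore_id}/systemschemas/{schema}"
    )
    return "PUT", url


# Daily usage per SKU over the last 30 days; a starting point for a cost dashboard.
DAILY_USAGE_QUERY = """
SELECT usage_date, sku_name, SUM(usage_quantity) AS total_usage
FROM system.billing.usage
WHERE usage_date >= date_sub(current_date(), 30)
GROUP BY usage_date, sku_name
ORDER BY usage_date
"""

if __name__ == "__main__":
    # Placeholder values; send the request with an authenticated HTTP client.
    method, url = enable_system_schema_request(
        "https://example.cloud.databricks.com", "<metastore-id>", "billing"
    )
    print(method, url)
    print(DAILY_USAGE_QUERY.strip())
```

The same pattern applies to other system schemas, such as `access`, which holds the audit and lineage tables.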

So, Should You Migrate?

Given the enhanced control, unified governance, and access to advanced features, migrating to Unity Catalog is highly recommended. Here’s a breakdown of the benefits:

- Centralized Management: Define and manage objects once for access across all connected workspaces.

- Robust Governance: Leverage built-in logging and auditing for improved data security and compliance.

- Future-Proof Platform: Unlock the potential of new Databricks features that rely on Unity Catalog metadata.

Making the Move with UCX

Migrating to Unity Catalog has become significantly easier with the introduction of UCX (Unity Catalog Migration Experience) by Databricks Labs in Q4 2023. UCX streamlines the process by providing the following:

- Automated Assessment: UCX runs jobs and crawls your metastore to assess the migration scope and generate estimates.

- Pre-built Dashboards: Gain insights into the migration process through readily available dashboards within UCX.

- Simplified Migration Jobs: Once the assessment is complete, UCX offers pre-built jobs to execute the actual migration.

Databricks offers several approaches to migrating to Unity Catalog, each catering to different needs:

- Lift and Shift (In-Place): This approach creates a UC database within the existing workspace housing the Hive Metastore. UCX facilitates object migration, allowing Hive and Unity Catalog to coexist until full adoption of Unity Catalog.

- New Workspace: Here, a new workspace is created with a dedicated UC database. Objects are migrated using UCX, and both workspaces point to the same data (external location). Jobs are run and validated in both workspaces before transitioning production environments.

- New Project Approach: This approach focuses on granular access control. You can define multiple catalogs (e.g., UC_Sales, UC_Marketing) aligned with your team structure, with access control set at the catalog level. Alternatively, you can define a single catalog per environment (Prod, Dev, Test), with schemas created for different enterprise functions (e.g., Prod_Sales, Dev_Finance). The most suitable approach depends on your preferred access control hierarchy.
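The two layouts in the New Project Approach differ mainly in what the first level of the namespace represents. The sketch below shows how the same table would be addressed under each one. The function names and the lowercase naming convention are assumptions for illustration, not a Databricks requirement.

```python
def team_layout(team: str, schema: str, table: str) -> str:
    """One catalog per team: access is controlled at the catalog level."""
    return f"uc_{team}.{schema}.{table}".lower()


def environment_layout(env: str, function: str, table: str) -> str:
    """One catalog per environment: each business function gets a schema."""
    return f"{env}.{function}.{table}".lower()


if __name__ == "__main__":
    print(team_layout("Sales", "orders", "daily_totals"))
    print(environment_layout("Prod", "Sales", "daily_totals"))
```

With per-team catalogs, a single catalog-level grant covers a whole team. With per-environment catalogs, promotion from Dev to Prod changes only the first part of the name.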

Post-Migration Considerations and Adoption Strategies

Migrating to Unity Catalog is just the first step. Here’s what to consider next:

- Hive Metastore Coexistence: After migration, the Hive Metastore remains functional. However, updates made there won’t automatically be reflected in the Unity Catalog governance tables. To ensure these tables are tracked, encourage users and jobs to interact directly with Unity Catalog. This means they have to start using a three-level namespace such as uc_catalog.schema.table instead of the existing two-level namespace.

- Driving User Adoption: Start by encouraging data analysts and business users to leverage Unity Catalog’s functionalities. Monitor usage and gradually revoke access to the Hive Metastore as adoption increases. Finally, migrate ETL jobs to Unity Catalog for a complete transition.
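Moving notebooks and jobs to the three-level namespace is largely a mechanical rewrite of table references. A minimal sketch of that rewrite, assuming a hypothetical target catalog name and plain dot-separated identifiers (no backtick-quoted names containing dots):

```python
def to_three_level(name: str, catalog: str) -> str:
    """Qualify a table reference with a Unity Catalog catalog name."""
    parts = name.split(".")
    if len(parts) == 2:
        # Legacy schema.table reference: prepend the target catalog.
        return f"{catalog}.{name}"
    if len(parts) == 3:
        # Explicit hive_metastore references are redirected to the new catalog.
        if parts[0] == "hive_metastore":
            return ".".join([catalog, parts[1], parts[2]])
        return name
    raise ValueError(f"expected schema.table or catalog.schema.table, got {name!r}")


if __name__ == "__main__":
    print(to_three_level("sales.orders", "uc_catalog"))
    print(to_three_level("hive_metastore.sales.orders", "uc_catalog"))
```

A helper like this can be run over an inventory of table references to estimate how many jobs still depend on two-level names.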

Unity Catalog is supported by:

- Clusters with Databricks Runtime 11.3 LTS or above

- SQL Warehouse (supported by default)
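When auditing existing clusters, the runtime requirement can be checked from the cluster's runtime version string. The sketch below assumes version strings shaped like `11.3.x-scala2.12`; the function name is hypothetical, and it checks only the runtime version, not any other cluster setting.

```python
import re

MIN_UC_RUNTIME = (11, 3)  # Databricks Runtime 11.3 LTS


def runtime_meets_uc_minimum(runtime_version: str) -> bool:
    """Return True if the runtime's major.minor version is at least 11.3."""
    match = re.match(r"(\d+)\.(\d+)", runtime_version)
    if match is None:
        raise ValueError(f"unrecognized runtime version: {runtime_version!r}")
    major, minor = int(match.group(1)), int(match.group(2))
    # Tuple comparison orders by major version first, then minor.
    return (major, minor) >= MIN_UC_RUNTIME


if __name__ == "__main__":
    for version in ["10.4.x-scala2.12", "11.3.x-scala2.12", "13.3.x-scala2.12"]:
        print(version, runtime_meets_uc_minimum(version))
```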

Real-world Success Story

LatentView Analytics helped a leading US software company migrate its legacy Hive Metastore to Unity Catalog. This transition provided valuable insights:

- Improved visibility into upstream and downstream data flows, which reduced the time taken to document lineage.

- Eliminated single points of ownership for tables, thereby reducing data duplication and redundancy.

- Unity Catalog unlocked features like user access monitoring and secure data sharing through Delta Sharing, which in turn helped govern how the data is shared and who has access to it.

- The entire activity was a 4-month, 2-person effort to upgrade 300+ tables, 100+ notebooks, and jobs. With the introduction of UCX, that effort could be reduced by 60%.

A Unified Path to Data Governance

By addressing the challenge of isolated workspaces, Databricks’ Unity Catalog establishes a central repository for data objects, streamlining management and fostering collaboration. Unity Catalog integrates seamlessly with innovative features like predictive IO and LakehouseIQ, ensuring your environment remains future-proof.

The introduction of UCX has significantly simplified the migration process, offering automated assessments, pre-built dashboards, and streamlined migration jobs. We recommend evaluating your specific needs and choosing the migration approach that best aligns with your access control structure. Once migrated, focus on driving user adoption and gradually phase out the Hive Metastore. By embracing Unity Catalog, you unlock a unified platform for data management, empowering your teams to collaborate effectively and leverage the full potential of the Databricks ecosystem.