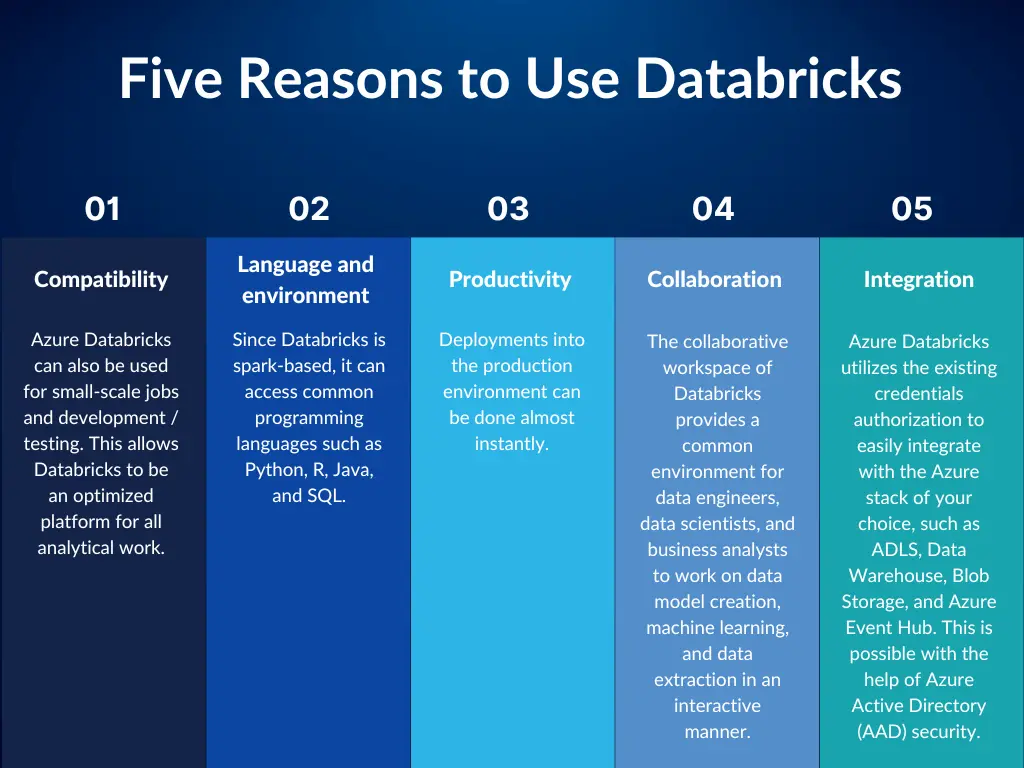

Databricks is a fully managed, cloud-based data engineering platform that processes and transforms massive datasets and supports exploring them through machine learning (ML) models. On the Microsoft cloud, it is available as Azure Databricks, one of the newer big data tools on Azure.

Today, 5,000+ organizations rely on Databricks to enable massive-scale data engineering, collaborative data science, full lifecycle ML, and business analytics. Databricks is a first-party service on Azure that integrates seamlessly with other Azure services, such as Event Hubs and Cosmos DB. In addition, Azure Databricks is built on top of a fully managed Apache Spark environment, which provides global scaling capacity.

Delta Lake in Azure Databricks

Consider a use case in which terabytes (TB) of Parquet data must be moved from one Azure storage account to another via Databricks. Azure Data Factory (ADF) acts as the triggering mechanism for the jobs in Databricks. The TB-sized data is first placed in Azure storage, which serves as the source for the Databricks job that processes it. At this scale, however, the workload needs faster queries, fresher data, and the ability to reproduce older data for concurrent runs. Delta Lake is used to meet these needs.

What Is a Delta Lake?

In November 2017, Databricks integrated with Microsoft Azure as a first-party service, Azure Databricks. On April 24, 2019, Databricks introduced a new open-source project called Delta Lake to bring reliability and data quality to data lakes.

Delta Lake is a transactional storage layer that stores data as versioned Parquet files. It also maintains a transaction log that records every change made to a table. On top of these two pieces, Delta Lake brings a layer of reliability to organizational data lakes by providing ACID (atomicity, consistency, isolation, and durability) transactions, data versioning, and rollback.
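To make the relationship between the transaction log, versioning, and rollback more concrete, here is a toy model in plain Python. It is not the Delta Lake API: the class `ToyDeltaTable`, its methods, and the file names are hypothetical, and the real log is far richer. It only illustrates the idea that each commit appends a set of "add file" and "remove file" actions, and that any version can be rebuilt by replaying the log.

```python
from dataclasses import dataclass, field


@dataclass
class ToyDeltaTable:
    """Toy model: every commit appends add/remove file actions to a log."""

    log: list = field(default_factory=list)  # log[v] holds the actions of version v

    def commit(self, add=(), remove=()):
        """Record one new version and return its version number."""
        self.log.append({"add": tuple(add), "remove": tuple(remove)})
        return len(self.log) - 1

    def files_at(self, version=None):
        """Replay the log up to `version` to find the live data files."""
        if version is None:
            version = len(self.log) - 1
        live = set()
        for actions in self.log[: version + 1]:
            live -= set(actions["remove"])
            live |= set(actions["add"])
        return live

    def rollback_to(self, version):
        """Restore an older snapshot by committing its file set as a new version."""
        target, current = self.files_at(version), self.files_at()
        return self.commit(add=target - current, remove=current - target)


table = ToyDeltaTable()
v0 = table.commit(add=["part-000.parquet"])
v1 = table.commit(add=["part-001.parquet"])
# A rewrite replaces one file with another in a single commit.
v2 = table.commit(add=["part-002.parquet"], remove=["part-000.parquet"])

print(sorted(table.files_at()))    # latest snapshot
print(sorted(table.files_at(v1)))  # reading the table as of version 1
v3 = table.rollback_to(v1)
print(sorted(table.files_at(v3)))  # same files as version 1 again
```

Note that the rollback in this sketch does not erase history. It adds a new version whose contents match the older one, so the intermediate versions remain in the log.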

Delta Lake Architecture

The Delta Lake architecture is an evolution of the Lambda architecture. It processes batch and streaming data in parallel and prevents data loss when ETL jobs or other steps fail. In addition, Delta Lake with the Databricks File System (DBFS) scales metadata handling to petabytes of files and keeps the data lake clean by avoiding duplicate records.

The Delta Lake architecture works with DBFS to improve scalability and reliability. It provides unified data management, ACID transactions, and scalable metadata handling. Where the Lambda architecture already combines streaming and batch data, Delta Lake adds scalability with low latency and handles large volumes of data. The architecture combines the best capabilities of a data lake, a data warehouse, and a streaming ingestion system, thereby providing reliable analytical solutions. Data flowing into the lake is organized into bronze, silver, and gold layers, each backed by ACID-compliant transactions:

- Bronze layer: Ingests raw data from various sources; this data still needs to be filtered in subsequent layers

- Silver layer: Filters and cleans the ingested data, joining various tables to provide refined records, including records that arrive by streaming

- Gold layer: An accessible layer often used for creating actionable insights and dashboards, loading a data warehouse, and generating reports of business metrics based on business requirements
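The flow through the three layers can be sketched in plain Python. This is only an illustration of the bronze, silver, and gold idea, not Databricks code: the sample records, the column names, and the functions `to_silver` and `to_gold` are all hypothetical, and in practice each layer would be a Delta table rather than a Python list.

```python
from collections import defaultdict

# Hypothetical raw events as they might land in the bronze layer.
bronze = [
    {"order_id": 1, "region": "EU", "amount": "20.5"},
    {"order_id": 1, "region": "EU", "amount": "20.5"},  # duplicate record
    {"order_id": 2, "region": "US", "amount": None},    # incomplete record
    {"order_id": 3, "region": "US", "amount": "10"},
    {"order_id": 4, "region": "EU", "amount": "4.5"},
]


def to_silver(rows):
    """Drop incomplete and duplicate rows, and cast amount to float."""
    seen, clean = set(), []
    for row in rows:
        if row["amount"] is None or row["order_id"] in seen:
            continue
        seen.add(row["order_id"])
        clean.append({**row, "amount": float(row["amount"])})
    return clean


def to_gold(rows):
    """Aggregate cleaned rows into a business metric: revenue per region."""
    revenue = defaultdict(float)
    for row in rows:
        revenue[row["region"]] += row["amount"]
    return dict(revenue)


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)  # {'EU': 25.0, 'US': 10.0}
```

Keeping the raw records untouched in bronze means the silver and gold layers can be rebuilt if the cleaning or aggregation rules change.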

To improve performance, the following steps were implemented on Delta Lake:

- Creating a temporary Delta Lake table in Databricks on top of the data in Azure storage

- Creating a partitioned target table in Databricks

- Creating a view that removes duplicates from the source data

- Merging data into the target table using the already created view

- Running the optimize command on the target table using key columns

- Running the vacuum command on the target table

Fetching data directly from the data lake and performing the above actions is time-consuming and causes several performance issues. With Delta Lake and its efficient data management techniques, the same actions complete quickly.
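The six steps listed above might translate into Spark SQL roughly as follows. This is a sketch under stated assumptions, not the exact commands used in this use case: the helper `delta_pipeline_sql`, every table, view, and column name, and the storage path placeholder are hypothetical. It also assumes that duplicates are exact copies of a row, that "using key columns" means Z-ordering by the key, and that a seven-day retention period is acceptable for the vacuum step. The Python code only builds the statements; in a Databricks notebook, each one would be passed to `spark.sql(...)`.

```python
def delta_pipeline_sql(source_path, staging, target, view, key, partition_col):
    """Build the Spark SQL statements for the six steps, in order."""
    return [
        # 1. Staging Delta table created from the Parquet files in Azure storage.
        f"CREATE OR REPLACE TABLE {staging} USING DELTA AS "
        f"SELECT * FROM parquet.`{source_path}`",
        # 2. Empty, partitioned target table with the same schema as staging.
        f"CREATE TABLE IF NOT EXISTS {target} USING DELTA "
        f"PARTITIONED BY ({partition_col}) AS SELECT * FROM {staging} WHERE 1 = 0",
        # 3. View that removes duplicates (assumes duplicates are exact row copies).
        f"CREATE OR REPLACE TEMP VIEW {view} AS SELECT DISTINCT * FROM {staging}",
        # 4. Upsert the deduplicated rows into the target table.
        f"MERGE INTO {target} AS t USING {view} AS s ON t.{key} = s.{key} "
        "WHEN MATCHED THEN UPDATE SET * WHEN NOT MATCHED THEN INSERT *",
        # 5. Compact small files and co-locate rows by the key column.
        f"OPTIMIZE {target} ZORDER BY ({key})",
        # 6. Delete unreferenced data files older than 7 days (168 hours).
        f"VACUUM {target} RETAIN 168 HOURS",
    ]


# All names below are placeholders for illustration only.
statements = delta_pipeline_sql(
    source_path="abfss://<container>@<account>.dfs.core.windows.net/<folder>",
    staging="sales_staging",
    target="sales_target",
    view="sales_dedup",
    key="order_id",
    partition_col="order_date",
)

for statement in statements:
    print(statement)  # in a Databricks notebook: spark.sql(statement)
```

The key column and the partition column are kept distinct here because Z-ordering applies to non-partition columns. If the source can contain several different rows for the same key, the view in step 3 would need a rule for choosing one row per key before the merge.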

Benefits of Delta Lake

Delta Lake enables developers to spend less time managing data partitions. Because the data history is stored, it is also much simpler to apply updates and deletions through the data versioning layer. With Delta Lake, incorporating new requirements and data changes becomes easier. Delta Lake improves reliability, compatibility, and scalability and is easy to manage, which keeps the data lake clean and the data safe. Its benefits include:

- ACID transactions on Spark

- Scalable metadata handling

- Unified batch and stream processing

- Schema enforcement

- Time travel

- Audit history

- Full data manipulation language (DML) support
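As one illustration from this list, schema enforcement can be pictured as a check that runs before a write is committed. The sketch below is a simplified model in plain Python, not Delta Lake's implementation: the function `validate_write`, the exception `SchemaMismatch`, and the sample schema are hypothetical. It assumes that unknown columns and mismatched types cause the whole batch to be rejected, while columns missing from a row are filled with nulls.

```python
class SchemaMismatch(Exception):
    """Raised when a batch does not fit the table schema."""


def validate_write(schema, rows):
    """Return rows aligned to `schema`, or raise without accepting any row."""
    accepted = []
    for row in rows:
        extra = set(row) - set(schema)
        if extra:
            raise SchemaMismatch(f"unexpected columns: {sorted(extra)}")
        for column, expected_type in schema.items():
            value = row.get(column)
            if value is not None and not isinstance(value, expected_type):
                raise SchemaMismatch(f"column {column!r} expects {expected_type.__name__}")
        # Columns missing from the row are written as None (null).
        accepted.append({column: row.get(column) for column in schema})
    return accepted


schema = {"id": int, "name": str}  # hypothetical table schema

good = validate_write(schema, [{"id": 1, "name": "a"}, {"id": 2}])
print(good)

try:
    validate_write(schema, [{"id": 3, "name": "c"}, {"id": "4", "name": "d"}])
except SchemaMismatch as error:
    print("write rejected:", error)
```

Because the function raises before returning anything, a single bad row rejects the entire batch, which mirrors the all-or-nothing behavior expected from an atomic write.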